Zvork: the man behind Oberon 2

As many of you already know, Oberon 2 is on its way to the rack of Reason 9 users in the form of a paid upgrade. On this occasion we talked to Zvork, the man behind Noxious, Oberon, LeSpace, etc. We asked him about his background, the early days of Rack Extensions, and future plans of course. We hope you enjoy it!

The Beginnings

RT(tiker01): Can you tell us a little bit about yourself?

Zvork: First of all, I’m based in Toulouse, France, country of duck, foie gras and Airbus. I am originally a computer science engineer who started his career in virtual reality and real time 3D in general, be it for the industry or video games. But during my academic years, I had for many years hesitated between graphics and sound since I was also a big fan of synths and sound synthesis in general. So I did a degree in electrical engineering and signal processing… which is now really helpful.

RT(Joey): And when did you first start working in audio and DSP programming?

Zvork: About 10 years ago I worked in a small company called Longcat Audio, which specialized in spatialized audio rendering, mostly around binaural rendering, as technical director and co-founder. That was my first pro experience of DSP programming and contact with the pro musical world, but I went back to video games in a graphics role afterwards.

RT(Joey): So what brought you back to audio and how did you come to be a RE developer?

Zvork: I transitioned to freelance about 5 years ago, wanting to build on ideas that I had in image and sound. And six months after that the first RE SDK was released. Since then, I can say that I’m a full time RE developer… periodically. When I have an ongoing RE project, I am dedicated full time. When I’m not, I’m either researching ideas or doing contract work for clients, mostly on the image side.

RT(Joey): And how about on the music side of things? Do you play any instruments?

Zvork: On the music side, I am a lousy, self-taught keyboard player, a lousier guitarist and an even lousier violinist. More seriously, I dabble with my keyboard and I am really grateful that we have computers now.

RT(tiker01): What kind of music are you creating? Anywhere one could listen to a piece?

Zvork: It’s hard to define. I think it’s mostly calm and cool stuff, with a gentle rhythmic nod of the head and finger snapping. My latest stuff is slightly more funky, but I haven’t uploaded music in a while. I’ve just checked my Soundcloud activity and my personal uploads date back 5 years. If you check out the Zvork account there, the 5 or 6 uploaded tracks from that period are personal stuff (“Strolling” to “Lost of sanity”). But all done on Reason with stock devices, except “Improvisation” which was originally made on Cubase, an Atari ST and a Roland U-20 keyboard (which is still my master keyboard, poor thing), and then ported to Reason. And please don’t criticize the mix quality 🙂

RT(tiker01): Ah, so you are using Reason in your own music production! How and when did you hear about Reason?

Zvork: I discovered Reason through a friend, around version 2, if I remember correctly. He was a semi-professional musician and composer and was excited by the modular cable based interface. So I jumped into it and after many years of Cubase, I switched to Reason. It is still my only DAW for all my music production, partly because I don’t have the time and curiosity to check out other competitors and partly out of habit, probably. Also, because I don’t have any real pro needs and requests. Music is mostly a hobby for me. I must say I am sorry the Props haven’t really pursued this totally modular non-linear idea that they had. I think it would make Reason really stand out… even more than it does now. But then again, musicians are a very strange bunch so I can understand if the Props’ market studies say they shouldn’t. 🙂

RT(tiker01): By the way, where does the name Zvork come from?

Zvork: That’s just a stupid pseudo that I’ve been using from my teenage years when role playing. Not sure it really is helpful in the audio context. And it is very bad as a company name because you’re rejected at the end of the list. Plumbers, who are far more business aware, always have names starting with “A”, for instance. So if I strike it big, expect me to change to Azvork.

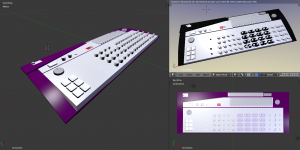

RT(tiker01): If one visits your website or Facebook it can be seen that you make quite nice renders of your devices. How much has your background helped in RE GUI design and which skills were the most used?

Zvork: It has helped a lot I think. I have always been interested in industrial design, do a bit of photography too, so I really appreciate the aesthetics of an object and am accustomed to certain aspects of making beautiful images. But I am not a dedicated pro computer artist even though I’ve worked among these guys for years! I am also proficient with tools like Photoshop or 3DS Max due to my background… or Blender which is the main 3D tool I use for the RE GUI designs and renders. I have also spent a lot of time working on GUI in my previous roles. Believe it or not, but this actually takes a lot of time when designing software. Making great GUI is extremely challenging, especially when we are addressing creative people who don’t like to be hindered. Or your device better be the killer app of the century and they can’t work without it! This isn’t the case for Zvork, yet.

RT: Do you suffer from additive [synthesis] addiction 🙂 ?

Zvork: Yes + No. Let us say that it is more out of pragmatism. First of all, I’ve spent a lot of time building the additive engine that was at the heart of Noxious and didn’t want to throw away that work, especially as I wasn’t entirely satisfied with the first version. Second, it is the only thing that differentiates my synths from the rest of the bunch in Reason (master Parsec aside, of course). Third, when you work on a technology, you keep getting new ideas on how to improve it. Some consequences of using additive synthesis are really cool, such as alias-free audio and the ability to easily create metallic, screaming FX and bell sounds. It’s great also alongside an analysis engine. But it is hard to tame, and for most of the sounds out there, it is a bit overkill for the simple user! The real challenge is mostly on how to control it in a creative and inspiring way; not really the engine in itself. So rest assured, I feel my journey has ended with additive synthesis at least as the main characteristic of a synth. For the future, I will probably reuse the engine for effects or as some sub-module of a synth because it is a great asset to have… once you have it.
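The alias-free property Zvork mentions follows from how an additive oscillator is built: it can simply stop adding partials before they reach the Nyquist frequency (half the sample rate). The sketch below is a minimal illustration of that idea, not code from Noxious or Oberon. The function names and the 1/k sawtooth recipe are assumptions chosen for the example.

```python
import math

def harmonic_count(f0, sample_rate):
    """Number of harmonics of f0 that lie strictly below Nyquist (hypothetical helper)."""
    nyquist = sample_rate / 2.0
    count = int(nyquist // f0)
    if count * f0 >= nyquist:
        count -= 1  # a partial exactly at Nyquist is dropped as well
    return max(count, 0)

def additive_saw(f0, sample_rate, n_samples):
    """Band-limited sawtooth: a sum of sine partials with amplitude 1/k."""
    n_harm = harmonic_count(f0, sample_rate)
    out = []
    for n in range(n_samples):
        t = n / sample_rate
        s = sum(math.sin(2.0 * math.pi * k * f0 * t) / k
                for k in range(1, n_harm + 1))
        out.append((2.0 / math.pi) * s)
    return out
```

For a 440 Hz note at 44.1 kHz this keeps 50 partials, the highest at 22 kHz. The cost is one sine per partial per sample, which hints at why such an engine can be "a bit overkill" for simple sounds.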

The journey of a Rack Extensionist

RT(tiker01): Recently we had a chat with Buddard from RoboticBean and we asked why did he start with CV Rack Extensions. He said “I think CV devices are by far the easiest way to get into RE development…..I could focus more on getting to know the SDK.” Were you thinking along the same line and focused on CV devices in the beginning or something else motivated you?

Zvork: I’ve read the interview and most definitely agree on this. The SDK is really novel and particular in certain aspects and has a learning curve, especially because it is much more constrained than other plugin SDKs I’ve encountered like VST. So you need to get a grasp of what you can and can’t do. But I began working on CV devices also because I felt a bit rusty on the DSP side and didn’t have any idea of the market size for Rack Extensions. After I felt comfortable with the SDK and had a development pipeline that seemed more efficient, I decided to try something more ambitious. That became Noxious, an early work with flaws and errors because there is more than SDK and DSP knowledge into making a synth. You are making a musical instrument and that is really specific. CV devices are extremely easy to invent in comparison.

RT(ScuzzyEye): You said you use Blender for the 3D. Anything special for the 2D textures?

Zvork: Photoshop CC for texturing. Nothing very fancy. Software tools have become cheaper but there are always some cool ones coming out. But when it comes to building GUIs for RE, the amount of detail you can put in the 754px wide frame is pretty limited. So I haven’t really felt the need for anything fancier.

RT(ScuzzyEye): I believe we both have a background in a different type of digital signal processing. That being the 2D, visual type. Before I got into audio my research was into digital image acquisition. There’s quite a bit of aliasing that happens there.

Zvork: True, moiré? I’m more into image synthesis and temporal anti-aliasing has “completely” solved the problem, now. But in audio, it’s amazing at how much research there is in anti-aliasing and band limited stuff.

RT(ScuzzyEye): If you can apply audio tricks to images, you can get amazing results. I think it’s because the ear is more sensitive to small changes than the eye.

Zvork: Absolutely, but the reverse isn’t that obvious. And it’s hard sometimes to discover that what you thought was an audio defect, or something very pretty in terms of “precision”, is accepted as “musical” or “interesting”. I think that’s the hardest part when working with musicians. I feel there is much more subjectivity or things that are difficult to grasp. For instance, what is a “warm” sound? I’ve come across a scientific article that tries to assess that quantitatively, but it’s not very precise. While if you are talking about images, the vocabulary is much more precise and shared. These things are the hardest things I feel and that’s why Oberon and Noxious are far from “perfect” musically; I still have to nail this. And again, I think we are trained to be all on the same page to what “bright” image is, or “pale” is, or “cold” is, etc… In case of sound, it’s not as clear to everyone.

RT(tiker01): A hot topic. As a developer, what do you think about the progress of SDK 1-2-2.5?

Zvork: This is going to be a long answer. I have started with the first initial release of the SDK and have been really appreciative of the design work involved in it. As an engineer I am very sensitive to clear, coherent designs, and a lot of stuff in the SDK, albeit with its constraints, made me feel really confident this was a well thought out system, future proof and all. The integrated shop and copy protection in itself were two major arguments for going into the RE business, by the way, not talking of multi-platform compatibility which is also a big issue when designing software. So this whole initial package of SDK and shop was really attractive. I for one, really like the 3D element based design of the UI (again, maybe because I was already familiar with working with 3D modelling packages). There were a number of constraints and small quirks but I was confident they would be resolved.

The second version of the SDK came out with Reason 7 and added custom displays, which was a major upgrade. Unfortunately, I have been internally pretty critical on how it was implemented, as I have hit numerous technical “hurdles” when developing Oberon. Without infringing the NDA, let us say it felt less thought out, has a lot of particularities that make it difficult to reuse existing UI creation processes, and the Props didn’t seize the opportunity to lift certain constraints on the original widget based system that weirdly didn’t apply to custom displays. Developing an RE with a normally complex custom display with, I believe, a decent basic level of quality, demands a big effort, bigger than I believe is necessary. So this is challenging when you are designing an RE as it forces me to try to do it in priority with the traditional knobs and buttons – which isn’t necessarily a bad thing for the user – because if I try to do it with custom displays… it will take much much more time. I have also on numerous occasions had to backtrack on a specific idea that I had after finding out that it was impossible to make the GUI that I imagined originally.

Recently the new SDK came out with great enhancements like sample loading. Unfortunately, again, a number of things feel rushed and awkward, while the initial constraints and quirks of the initial release are still there. So I don’t want to sound too critical but let us say that I am a bit… annoyed by the SDK right now. 🙂 But if I look at it globally, with shop and all, I still think it’s a good system… with some serious flaws that should have been fixed by now.

RT(tiker01): How did you come up with the name of your plugins?

Zvork: Out of nowhere! I think Noxious is about the sound that came out of it originally. It wasn’t warm; it was cold and a bit nasty. Oberon… well it was originally “Oberton” until EnochLight, who was beta testing, pointed out that a Bulgarian company building speakers was called Oberton. Oberon was a lame transformation of “Uber-ton”, a German-ish inspired name. We all know Germans do it better in terms of engineering. 🙂

LeSpace is a pun of some sort. L’espace is the French equivalent of “the space” and, as someone pointed out in the PUF when it came out, it was also a tribute to the guys in the marketing team at Renault in the 80’s who decided to call the Renault 5 car in the US “LeCar”. Very bad, but it did have a sort of French touch to it. 🙂

Current projects

RT(tiker01): Those who follow you on social media already know that you are working on Oberon 2.0. Could you share with us some details about what the key new features are?

Zvork: They are mostly all linked to the new SDK which has lifted some previous constraints and added new features, primarily, as you are aware, sample loading. So you will be able to import audio samples into Oberon, in place of the user waves. These samples are analyzed and transformed into a sequence of partial spectrums that can be played back like any of the built-in wave sequences.

Since Noxious, I have been thinking on how to implement audio analysis/resynthesis but without having a really good algorithm. Thankfully, I’ve managed to pull off that trick for Oberon 2. It is not perfect as, with any resynthesis method based on additive synthesis, there is a bit of phasing going on, but for instruments it works very nicely. When you throw a really complex sound spectrum at it like an explosion, it’s not as perfect 🙂 So basically you get sample playback without the time stretching involved in classical audio sample playback when played in pitches far away from the original sample pitch. To nicely implement sample playback complete with looping and all, we also enhanced the envelopes with independent start and end loop points.
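The "no time stretching" point can be put into numbers. A classical sampler changes pitch by reading the sample faster or slower, so duration shrinks or grows with the pitch ratio. A resynthesis engine that steps through a sequence of partial spectrums at a fixed rate only rescales the partial frequencies. The sketch below is a simplified illustration with hypothetical function names and an assumed fixed frame rate; it is not Oberon's actual algorithm.

```python
def pitch_ratio(semitones):
    """Frequency ratio for a transposition in equal-tempered semitones."""
    return 2.0 ** (semitones / 12.0)

def resampled_duration(original_seconds, semitones):
    """Classical sample playback: pitch and speed change together."""
    return original_seconds / pitch_ratio(semitones)

def resynthesized_partials(partial_hz, semitones):
    """Resynthesis: transpose by rescaling each partial's frequency."""
    ratio = pitch_ratio(semitones)
    return [f * ratio for f in partial_hz]

def resynthesized_duration(n_frames, frames_per_second):
    """Resynthesis: duration depends only on the frame count and frame rate."""
    return n_frames / frames_per_second
```

Played one octave up, a 2 second sample lasts 1 second in the resampling model, while 200 spectrum frames at an assumed 100 frames per second still last 2 seconds.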

On the oscillator side, samples aren’t the only novelty. There will also be modifiers to the wave shapes. So now, after drawing your wave shapes you will be able to add FM modulation, Sync modulation, etc… This will work for both shapes of a wave so you can interpolate between FM intensities, etc. This, I must say, has really broadened the soundscape of Oberon. But there is one drawback: these modifiers can’t be modulated, sorry for that.

Finally, there are a lot of GUI enhancements and changes. For instance, the modulation matrix has been moved to the central display, which lets us create nice ordered popup menus for the selection of modulation destinations. Oh, and there will also be a special audio output mode for users of Blamsoft’s Polymodular system who want to integrate Oberon.

RT(tiker01): Could you share any examples of what the aforementioned new features of Oberon make possible?

Zvork: The wave modifiers are really interesting as they add a whole new palette of sounds that were difficult to obtain in the first version. Drawing curves is quick but gets tedious when you want to draw some resonance undulations, for instance. With FM modulation, you can easily get formant rich sounds that combined with the spectrum interpolation mode are quite interesting. Sample loading should let you do some morphing between samples. There’s a patch, “Bell to Sitar”, that demonstrates that. But the musical pertinence of the results depends a lot on the samples. Sample loading is first of all a new source for sound experimentations as it gives access to an infinite amount of user spectrum sequences. On a more day to day aspect, it is also a very quick way of playing samples. Just drag and drop a sample with a loop and you very quickly can play it without that annoying time stretching effect. Vibrato rate stays the same across the keyboard, etc…

RT(tiker01): As changeable panels are an option in SDK 2.5, have you considered adding FX to Oberon 2.0?

Zvork: Yes, I have, but not necessarily because of the new option of using changeable panels. There is this old debate of whether synths should have onboard effects and I have, for both of my synths, decided that I’d rather spend time on the synth engine. Especially as in Reason, with the combinator, you can group your synth with great stock and third party effects and bundle that as a patch. Now, business-wise, this seems to be a very bad idea as the majority of clients buy a synth for the patches it provides and these have to be polished with effects and all. Hiding the synth in a combinator feels awkward too, because you’re hiding the synth, visually I mean, and I feel there is this unspoken feeling when you go that route (which I have, for the moment) that it isn’t the synth that’s shining but the effects. Whereas when you are using onboard FX, the feeling is more along the line of “it’s entirely the synth doing this”.

Maybe I’m wrong, but this is how I analyze the situation and this stuff is a bit subjective. So I know that onboard FX are somehow an obligation now, at least a delay and a chorus. But on the other hand, I feel Oberon has a certain characteristic to it, and a certain complexity. I didn’t want to dump another layer of complexity and UI clutter on something already different. The only justification would be if the effect was unique and blended closely with the engine or would be a per voice effect and, believe me, I spent some time trying out some stuff. I tried adding soft clipping per-voice with drive control but I had a hard time keeping aliasing under control, for instance, and since alias-free audio is one of the characteristics of additive synthesis, it felt like a hack. But if I do a next instrument, there will certainly be some amount of onboard FX.
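The aliasing problem with per-voice soft clipping can be illustrated numerically. Any nonlinear shaper adds harmonics on top of the ones the additive engine produced, and those new harmonics are no longer guaranteed to stay below Nyquist. The sketch below uses `tanh` as a stand-in soft clipper, which is an assumption since the interview does not say which curve was tried. All function names are hypothetical.

```python
import math

def harmonic_amplitude(drive, harmonic, steps=4096):
    """Sine-component amplitude of tanh(drive * sin(theta)) at a given harmonic,
    estimated by correlating over one period."""
    total = 0.0
    for i in range(steps):
        theta = 2.0 * math.pi * i / steps
        total += math.tanh(drive * math.sin(theta)) * math.sin(harmonic * theta)
    return 2.0 * total / steps

def aliased_frequency(freq_hz, sample_rate):
    """Frequency heard after sampling: fold freq_hz back into 0..Nyquist."""
    folded = freq_hz % sample_rate
    return min(folded, sample_rate - folded)
```

With a pure sine going in, a low drive leaves the third harmonic negligible, while a drive of 3 makes it clearly audible. For a 5 kHz partial at 44.1 kHz, the fifth harmonic created by clipping lands at 25 kHz and folds back to 19.1 kHz, which is not harmonically related to the note.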

Future

RT(tiker01): Do you plan to update any of your other Rack Extensions using SDK 2.5?

Zvork: I’m not sure about that. A good candidate would be Noxious that could benefit from it on the UI side. But I’m not sure it would be interesting market-wise as Noxious isn’t very appreciated by the Reason user-base 🙂 So, basically, no updates right now… sorry about that.

RT(tiker01): Any new Rack Extensions planned for 2017, perhaps something utilising the new SDK?

Zvork: We have two ideas in the pipeline. One is based on sample loading and analysis / resynthesis and would be an instrument based on the analysis engine built for the upcoming Oberon update. We can’t announce any dates or give any more details, sorry about that. It’s not that I am particularly secretive but a lot of things can happen along the way, not the least being what comes out in the shop from other vendors. The other one is another synth but not additive. This is an ongoing project still in R&D phase which may not transform itself into a rack extension, and maybe not into a product at all, for that matter. There are a lot of great synths out there and it is difficult to find something really original, musical, CPU friendly and that can be done with the SDK. The same goes for effects of course.

RT(tiker01): Thank you for this extensive interview. It was a real pleasure to talk to you. Can you give our readers a clue when Oberon 2 will hit the shop?

Zvork: The beta tests are nearly finished so hopefully sometime during the first half of April, which should be very soon. Thank you for the interview. The pleasure was mine too and I wish good luck to ReasonTalk!